This is a re-post of an article by Cal Wick of Fort Hill, originally published on the 70-20 Blog site. I have added a few observations of my own at the end. (First published August 2016.)

Calhoun Wick

Cal is deeply experienced and knowledgeable in the area of workplace learning. He has been studying and supporting it for many years and is co-author of the highly acclaimed Six Disciplines of Breakthrough Learning: How to Turn Training and Development into Business Results (Pfeiffer, 2010). Cal’s company has also developed the 70-20 tool, which supports learning in the workflow in innovative and measurable ways; it is well worth test-driving.

Learning through Conversation (April 2016): a Skype discussion between Cal Wick, Bob Eichinger and Charles Jennings

70-20-10: Origin, Research, Purpose

by Cal Wick

------------------------

Where It All Began

The 70-20-10 model has been part of the corporate learning and development lexicon for decades. Some people find that implementing 70-20-10 brings transformational change to their corporate learning cultures. Others are not quite sure what to make of it or how to leverage it. A third group dismisses it, claiming that 70-20-10 has no research behind it and offers little value because the numbers are not accurate.

Recently I had a conversation with Bob Eichinger, one of the original thought leaders who created the 70-20-10 model, about its origin, research, and purpose. I found what Bob said to be so compelling that I asked him to write it up. Bob agreed.

Here is what he shared:

To Whom It Apparently Concerns, (Bob Eichinger writes)

Yes Virginia, there is research behind 70-20-10!

I am Robert W. Eichinger, PhD. I’m one of the creators, along with the research staff of the Center for Creative Leadership, of the 70-20-10 meme [the dictionary defines a meme as an “idea, behavior, or style that spreads from person to person”]. Note: see The Leadership Machine, Michael M. Lombardo and Robert W. Eichinger, Lominger International, Inc., Third Edition 2007, Chapter 21, Assignmentology: The Art of Assignment Management, pages 314-361.

We were working on a section of a course on planning for the development of future leaders. One of the research study’s objectives was to find out where today’s leaders had learned the skills and competencies they were good at when they got into leadership positions.

The study interviewed 191 currently successful executives from multiple organizations. As part of an extensive interview protocol, researchers asked these executives where they thought they had learned the things that led to their success: The Lessons of Success. The interviewers collected 616 key learning events, which the research staff coded into 16 categories.

The 16 categories were too complex to use in the course, so we in turn re-coded them into five to make them easier to communicate.

The five categories were learning from challenging assignments, from other people, from coursework, from adverse situations and from personal experiences outside work. Since we were teaching a course about how to develop effective executives, we could not use adverse situations (you can’t plan or arrange them for people) or personal experiences outside work (again, you can’t plan for them). Those two categories accounted for 25% of the learning events across the original 16 categories. That left 75% of the Lessons of Success for the other three categories.

So, with the remaining three categories rescaled to add up to 100%, the final easy-to-communicate meme was: 70% Learning from Challenging Assignments; 20% Learning from Others; and 10% Learning from Coursework. And thus we created the 70-20-10 meme that is still widely quoted today.

The basic findings of the Lessons of Success study have been replicated at least nine times that I know of. These include samples in China, India and Singapore, and of female leaders, since the original samples of executives in the early 1980s were mostly male. The findings are all roughly in line with 70-20-10: 70-22-8, 56-38-6 (women), 48-47-5 (middle level), 73-16-11 (global sample), 60-33-7, 69-27-4 (India), 65-33-2 (Singapore) and 68-25-7 (China). A number of companies, including 3M, have also replicated the study and found roughly the same results.

So some have said that 70-20-10 doesn’t come from any research. It does. Some have said the 70-20-10 is just common sense. It is now. Experience has always been the best teacher. Still is.

I might add that there is a lot of variance between organizations and levels and types of people. These studies were mostly about how to develop people for senior leadership positions in large global companies. The meme for other levels of leadership and different kinds of companies might be different. There might also be other memes for different functional areas.

Sincerely,

Bob

From My Perspective (Cal Wick writes)

From my perspective, Bob and Mike’s genius was to take the 16 sources of learning present in the 616 key learning events recounted by participants in the Lessons of Success study, drop out the 25% of learning that comes from hardship and from outside work, and turn the remainder into a meme of three sources of learning now known around the world as 70-20-10. As a meme or reference model, it both validates the importance of Formal Courses (the “10”) and opens up the opportunity to intentionally activate Learning from Challenging Assignments (the “70”) and Learning from Others (the “20”).

Implications

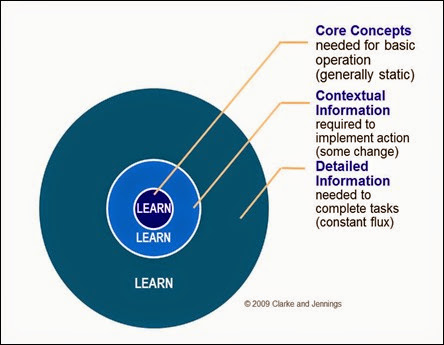

1. Bob and Mike’s 70-20-10 meme made it visible that learning takes place not only in formal settings (the 10) but also through experience (the 70) and through relationships (the 20). As a model, its value lies not in pinning down the exact numbers on either side of a decimal point, but in opening our eyes to the learning that happens all the time on the job and is largely invisible.

2. When 70-20 learning becomes visible and intentional, Learning & Development has the opportunity to harness its potential. The challenge is how L&D can activate and support informal and social learning in an intentional, high-impact way that builds a vibrant learning culture, one that leads to higher performance as employees embrace continuous development on the job. The 70-20 learning of today’s workforce is largely self-directed: just look at the web searches you have done in the last week. The opportunity for L&D is to add value by making available the resources, people, expertise and digital tools that support and accelerate the 70-20 learning happening every day and everywhere.

3. It turns out that there is now significant research that supports the reality and value of learning beyond the formal ‘10’.

This is a very exciting time in our industry and we’re delighted to be part of the conversation and the exploration of new strategies to drive competitive advantage and improved performance through 70-20 learning.

My Observations (Charles Jennings writes)

There’s no doubt the work of Bob Eichinger, Mike Lombardo and the team at the Center for Creative Leadership was fundamental in highlighting a critical fact – that most learning, most of the time, comes not from courses and programmes, classrooms, workshops and eLearning, but from everyday activities. In other words, we learn more from working and interacting with fellow workers than we do from away-from-work training.

This research set the ‘70:20:10 ball’ rolling, and Bob’s explanation above answers many questions that I’ve heard raised over the years.

It’s also important to recognise, as Cal Wick points out above, that many other researchers have also identified the importance of learning beyond the ‘10’. There is an increasing body of research and empirical evidence that the closer to the point of use learning occurs, the more likely it is to be turned into action.

Jay Cross, a friend and colleague in the Internet Time Alliance, spent the last years of his life raising awareness of the importance of ‘informal learning’ across the world. As early as 2002 Jay was describing the unrelenting focus on formal learning in terms of the ‘Spending/Outcomes Paradox’.

Jay talked about ‘the other 80%’, the informal learning that happens beyond the control, and often the sight, of the HR and L&D departments. He cited a number of studies and observations that supported this and documented them briefly.

More recently, researchers have been validating the importance of the learning that happens as part of the daily workflow. One example is the work of Professor Andries de Grip and his team at the Research Centre for Education and the Labour Market at Maastricht University in the Netherlands. Professor de Grip’s 2015 report ‘The importance of informal learning at work’ explains that:

‘On-the-job learning is more important for workers’ human capital development than formal training’

and also that:

‘Rapidly changing skill demands and rising mandatory retirement ages make informal learning even more important for workers’ employability throughout their work life. Policies tend to emphasize education and formal training, and most firms do not have strategies to optimize the gains from informal learning at work’

We’ve known for years that the ‘70+20’ are critical and that it’s in these zones that most learning happens. It’s now time to put this knowledge into action.

The 70:20:10 Institute has developed a full methodology based on 70:20:10 principles. This methodology is explained in detail in the book by Arets, Jennings and Heijnen ‘70:20:10 Towards 100% Performance’. Full information is available on the 70:20:10 Institute website.